How do I report bugs and issues?

Please report bugs on Bugzilla on kernel.org (requires registration) setting the component to Btrfs, and report bugs and issues to the mailing list (linux-btrfs@vger.kernel.org; you are not required to subscribe). For quick questions you may want to join the IRC #btrfs channel on freenode (and stay around for some time in case you do not get the answer right away).

Please use btrfs-progs somewhere in the bug subject if you're reporting a bug for the userspace tools.

Never use the Bugzilla on the 'original' Btrfs project page at Oracle.

If you include kernel log backtraces in bug reports sent to the mailing list, please disable word wrapping in your mail user agent to keep the kernel log in a readable format.

Please attach files (like logs or dumps) directly to the bug and don't use pastebin-like services.

I can't mount my filesystem, and I get a kernel oops!

First, update your kernel to the latest one available and try mounting again. If you have your kernel on a btrfs filesystem, then you will probably have to find a recovery disk with a recent kernel on it.

Second, try mounting with options -o recovery or -o ro or -o recovery,ro (using the new kernel). One of these may work successfully.
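The three attempts above can be scripted so that the first working option wins. This is a minimal sketch: the function name try_mount and the device and mount point in the usage line are placeholders, not part of the FAQ. On newer kernels the recovery option has been superseded by usebackuproot, so substitute that if your kernel rejects recovery.

```shell
# Try each recovery-oriented mount option in turn; stop at the first success.
# try_mount is a hypothetical helper name.
try_mount() {
    dev=$1
    mnt=$2
    for opts in recovery ro recovery,ro; do
        if mount -t btrfs -o "$opts" "$dev" "$mnt"; then
            echo "mounted with -o $opts"
            return 0
        fi
    done
    echo "all mount attempts failed" >&2
    return 1
}

# Usage (as root): try_mount /dev/sdX1 /mnt
```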

Finally, if and only if the kernel oops in your logs has something like this in the middle of it,

then you should try using btrfs-zero-log.

Filesystem can't be mounted by label

See the next section.

Only one disk of a multi-volume filesystem will mount

If you have labelled your filesystem and put it in /etc/fstab but mounting by label fails, or if one volume of a multi-volume filesystem fails to mount while the other succeeds, then you need to ensure that you run btrfs device scan first.

This should be in many distributions' startup scripts (and initrd images, if your root filesystem is btrfs), but you may have to add it yourself.
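If you do have to add the scan yourself, a sketch along these lines may be enough. The helper name scan_and_mount and the label and mount point in the usage line are hypothetical.

```shell
# Register all btrfs devices with the kernel, then mount by label.
# scan_and_mount is a hypothetical helper name.
scan_and_mount() {
    label=$1
    mnt=$2
    btrfs device scan && mount LABEL="$label" "$mnt"
}

# Usage (as root): scan_and_mount mydata /mnt/data
```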

My filesystem won't mount and none of the above helped. Is there any hope for my data?

Maybe. Any number of things might be wrong. The restore tool is a non-destructive way to dump data to a backup drive and may be able to recover some or all of your data, even if we can't save the existing filesystem.
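A cautious way to use the restore tool is to do a dry run first and copy only afterwards. The sketch below assumes the filesystem is unmounted and that the destination directory lives on a different, healthy drive; restore_data and both paths are placeholders.

```shell
# Copy whatever is readable from an unmountable filesystem to a backup dir.
# -D only lists what would be restored; -v prints each file name.
restore_data() {
    dev=$1
    dest=$2
    btrfs restore -D -v "$dev" "$dest" || return 1   # dry run, writes nothing
    btrfs restore -v "$dev" "$dest"                  # actual copy
}

# Usage (as root): restore_data /dev/sdX1 /mnt/backup
```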

Defragmenting a directory doesn't work

Running btrfs filesystem defragment on a directory does not defragment the contents of the directory.

This is by design. btrfs fi defrag operates on the single filesystem object passed to it, e.g. a (regular) file. When run on a directory, it defragments the metadata held by the subvolume containing the directory, and not the contents of the directory. If you want to defragment the contents of a directory, you have to use the recursive mode with the -r flag (see recursive defragmentation).
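A recursive run might look like the following sketch; defrag_tree and the path in the usage line are placeholders.

```shell
# Defragment every file below a directory, not just the directory's metadata.
defrag_tree() {
    btrfs filesystem defragment -r "$1"
}

# Usage: defrag_tree /srv/data
```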

Compression doesn't work / poor compression ratios

First, make sure you have passed the 'compress' mount option in fstab or on the mount command line. If you have and the ratios are still unsatisfactory, try the 'compress-force' option, which makes btrfs compress everything. Ratios with 'compress' are often low because btrfs backs out of the decision to compress very easily (probably to avoid wasting CPU time on data that compresses badly).
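To confirm which compression option is actually in effect, the mount table can be inspected. This is a sketch only: compress_opts is a hypothetical helper, and the optional second argument exists purely so the function can be tried against a sample file instead of /proc/mounts.

```shell
# Print the compression-related options active on a btrfs mount point.
# /proc/mounts fields: device, mount point, fs type, options, dump, pass.
compress_opts() {
    mnt=$1
    table=${2:-/proc/mounts}
    awk -v m="$mnt" '$2 == m && $3 == "btrfs" {
        n = split($4, o, ",")
        for (i = 1; i <= n; i++)
            if (o[i] ~ /^compress/) print o[i]
    }' "$table"
}

# Usage: compress_opts /data
# To switch an already mounted filesystem: mount -o remount,compress-force /data
```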

Copy-on-write doesn't work

You've just copied a large file, but still it consumed free space. Try:
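A plain cp writes a full second copy of the data. With GNU cp, a copy-on-write clone has to be requested explicitly; the sketch below wraps that in a hypothetical helper named cow_copy. With --reflink=always the copy fails rather than silently falling back to a full copy.

```shell
# Clone a file so that source and copy share extents until one is modified.
cow_copy() {
    cp --reflink=always -- "$1" "$2"
}

# Usage: cow_copy big.img big-clone.img
```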

I get the message 'failed to open /dev/btrfs-control skipping device registration' from 'btrfs dev scan'

You are missing the /dev/btrfs-control device node. This is usually set up by udev. However, if you are not using udev, you will need to create it yourself:
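The node is a character device with major number 10 (the misc driver) and minor number 234. A minimal sketch that creates it only when it is absent follows; ensure_btrfs_control is a hypothetical helper name.

```shell
# Create the btrfs control node if it does not exist yet.
ensure_btrfs_control() {
    node=${1:-/dev/btrfs-control}
    [ -e "$node" ] || mknod "$node" c 10 234
}

# Usage (as root): ensure_btrfs_control
```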

You might also want to report to your distribution that their configuration without udev is missing this device.

How do I clean up an old superblock?

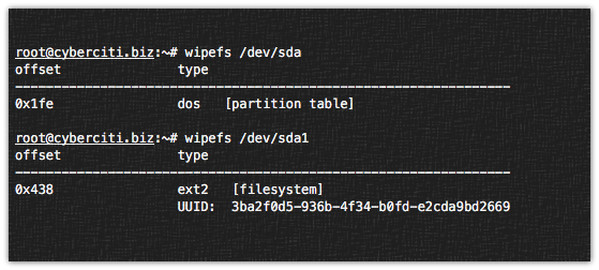

The preferred way is to use the wipefs utility that is part of the util-linux package. Running the command with the device will not destroy the data, just list the detected filesystems:

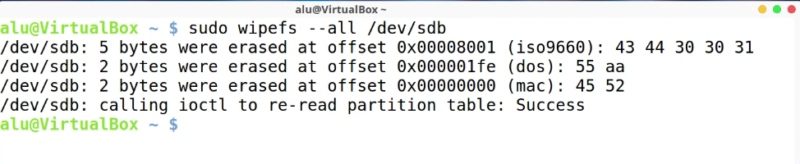

To actually remove the filesystem signature, pass the reported offset to the -o option, i.e. copy the offset number to the command-line parameter.
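The two steps can be sketched as a pair of small helpers. The function names and the device are placeholders; 0x10040 is where the primary btrfs signature normally sits (64KiB plus 64 bytes), but use whatever offset the listing actually reports.

```shell
# Step 1: list detected signatures; nothing is written to the device.
list_signatures() {
    wipefs "$1"
}

# Step 2: erase the signature at one specific offset.
erase_signature() {
    dev=$1
    offset=$2          # as printed by list_signatures, e.g. 0x10040
    wipefs -o "$offset" "$dev"
}

# Usage (as root):
#   list_signatures /dev/sdX
#   erase_signature /dev/sdX 0x10040
```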

Related problem:

A long time ago I created btrfs on /dev/sda. After some changes btrfs moved to /dev/sda1.

Use wipefs in this case as well: it deletes only a small portion of sda that will not interfere with the data of the partition that follows.

What if I don't have wipefs at hand?

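There are three superblocks: the first one is located at 64KiB, the second one at 64MiB, the third one at 256GiB. Each holds the 8-byte magic string _BHRfS_M at byte 64 of the superblock; overwriting those 8 bytes with zeros on all three superblocks resets the magic string.

If you want to restore the superblocks' magic string, write _BHRfS_M back at the same offsets.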
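Both operations can be sketched with dd. The helper names below are hypothetical, and the sketch skips any superblock copy that would lie beyond the end of the target, since small devices do not have all three. Try it on a scratch image file before pointing it at a real device.

```shell
# Byte offsets of the three superblock copies; the magic is 64 bytes in.
SB_OFFSETS="$((64 * 1024)) $((64 * 1024 * 1024)) $((256 * 1024 * 1024 * 1024))"

# write_magic TARGET zero|magic: overwrite the 8 magic bytes of every
# superblock copy that fits inside TARGET.
write_magic() {
    target=$1
    if [ -b "$target" ]; then
        size=$(blockdev --getsize64 "$target")
    else
        size=$(stat -c %s "$target")
    fi
    for off in $SB_OFFSETS; do
        pos=$((off + 64))
        [ $((pos + 8)) -le "$size" ] || continue
        if [ "$2" = zero ]; then head -c 8 /dev/zero; else printf '_BHRfS_M'; fi |
            dd of="$target" bs=1 count=8 seek="$pos" conv=notrunc 2>/dev/null
    done
}

reset_magic()   { write_magic "$1" zero; }    # hide the filesystem
restore_magic() { write_magic "$1" magic; }   # make it detectable again

# Usage (as root): reset_magic /dev/sdX
```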

I get 'No space left on device' errors, but df says I've got lots of space

First, check how much space has been allocated on your filesystem:

Note that in this case, all of the devices (the only device) in the filesystem are fully utilised. This is your first clue.

Next, check how much of your metadata allocation has been used up:
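One way to put a number on this is to feed the raw-byte output of btrfs filesystem df -b into a small filter. The sketch assumes each line has the form "Metadata, PROFILE: total=N, used=N"; metadata_used_pct is a hypothetical helper name.

```shell
# Read `btrfs filesystem df -b <mountpoint>` on stdin and print the
# percentage of allocated metadata space that is in use.
metadata_used_pct() {
    awk -F'[=,]' '/^Metadata/ {
        # fields: "Metadata", " PROFILE: total", <total>, " used", <used>
        printf "%d\n", $5 * 100 / $3
    }'
}

# Usage: btrfs filesystem df -b /mnt | metadata_used_pct
```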

Note that the Metadata used value is fairly close (75% or more) to the Metadata total value, but there's lots of Data space left. What has happened is that the filesystem has allocated all of the available space to either data or metadata, and then one of those has filled up (usually, it's the metadata space that does this). For now, a workaround is to run a partial balance:
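A sketch of such a partial balance follows. It starts at -dusage=5 and retries with a higher percentage whenever the command reports that no chunks were relocated. The helper name partial_balance and the step values 10, 20 and 40 are arbitrary choices for illustration.

```shell
# Data-only partial balance; raise the usage filter until something moves.
partial_balance() {
    mnt=$1
    for pct in 5 10 20 40; do
        out=$(btrfs balance start -dusage="$pct" "$mnt") || return 1
        echo "$out"
        case $out in
            *"had to relocate 0 out of"*) ;;   # nothing moved, try higher
            *) return 0 ;;
        esac
    done
    return 1
}

# Usage (as root): partial_balance /mnt
```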

Note that there should be no space between the -d and the usage. This command will attempt to relocate data in empty or near-empty data chunks (at most 5% used, in this example), allowing the space to be reclaimed and reassigned to metadata.

If the balance command ends with 'Done, had to relocate 0 out of XX chunks', then you need to increase the 'dusage' percentage parameter till at least one chunk is relocated. More information is available elsewhere in this wiki, if you want to know what a balance does, or what options are available for the balance command.

I cannot delete an empty directory

First case, if you get:

- rmdir: failed to remove ‘emptydir’: Operation not permitted

then this is probably because 'emptydir' is actually a subvolume.

You can check whether this is the case with:

To delete the subvolume you'll have to run:
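Check and removal can be combined in one helper. The sketch relies on btrfs subvolume show failing for a path that is not a subvolume; both function names are hypothetical.

```shell
# Succeed if the path is the root of a subvolume.
is_subvolume() {
    btrfs subvolume show "$1" >/dev/null 2>&1
}

# Remove a subvolume with the btrfs tool, a plain directory with rmdir.
remove_dir_or_subvolume() {
    if is_subvolume "$1"; then
        btrfs subvolume delete "$1"
    else
        rmdir "$1"
    fi
}

# Usage (as root): remove_dir_or_subvolume emptydir
```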

Second case, if you get:

- rmdir: failed to remove ‘emptydir’: Directory not empty

then you may have an empty directory with a non-zero i_size.

You can check whether this is the case with:
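On btrfs an empty directory normally reports a size of 0, so a non-zero value from stat on a directory with no entries points to this problem. A minimal sketch, meaningful only on btrfs (other filesystems report non-zero sizes for empty directories); check_dir_isize is a hypothetical name.

```shell
# Flag a directory that has no entries but a non-zero i_size.
check_dir_isize() {
    dir=$1
    entries=$(ls -A "$dir" | wc -l)
    size=$(stat -c %s "$dir")
    if [ "$entries" -eq 0 ] && [ "$size" -ne 0 ]; then
        echo "$dir: empty but i_size=$size"
        return 1
    fi
    echo "$dir: ok"
}

# Usage: check_dir_isize emptydir
```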

Running 'btrfs check' on that (unmounted) filesystem will confirm the issue and list other problematic directories (if any).

You will get a similar output (excerpt):

Such errors should be fixable with 'btrfs check --repair' provided you run a recent enough version of btrfs-progs.

Note that 'btrfs check --repair' should not be used lightly as in some cases it can make a problem worse instead of fixing anything.
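In line with that caution, the sketch below always runs the read-only check first and attempts a repair only when asked explicitly. The helper name and device are placeholders; the filesystem must be unmounted.

```shell
# Read-only check first; repair only if the caller passes --repair.
check_then_maybe_repair() {
    dev=$1
    if btrfs check "$dev"; then
        echo "no errors found"
        return 0
    fi
    if [ "$2" = "--repair" ]; then
        btrfs check --repair "$dev"
    else
        echo "errors found; back up first, then re-run with --repair" >&2
        return 1
    fi
}

# Usage (as root): check_then_maybe_repair /dev/sdX1
```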

balance will reduce metadata integrity, use force if you want this

This means that the conversion will remove a degree of metadata redundancy, for example when going from profile raid1 or dup to single.

unable to start balance with target metadata profile 32

This means that a conversion has been attempted from profile raid1 to dup with btrfs-progs earlier than version 4.7.

parent transid verify failed

Example:

- 4316004352 is the byte offset of the metadata block

- 289 expected generation of the block

- 283 generation found in the block
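The three numbers can be pulled out of the kernel log with a small filter. The message wording matched here ("... failed on OFFSET wanted N found N") is an assumption based on the fields listed above, and the sample line in the usage comment is reconstructed from those same numbers rather than taken from a real log. parse_transid is a hypothetical helper name.

```shell
# Extract block offset, expected generation and found generation.
parse_transid() {
    sed -n 's/.*parent transid verify failed on \([0-9]*\) wanted \([0-9]*\) found \([0-9]*\).*/offset=\1 wanted=\2 found=\3/p'
}

# Usage: dmesg | parse_transid
# Or on a single line:
#   echo 'parent transid verify failed on 4316004352 wanted 289 found 283' | parse_transid
```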

Under normal circumstances the generation numbers must match. A mismatch can be caused by a lost write after a crash (i.e. a dangling block 'pointer'; software bug, hardware bug) or a misdirected write (the block was never written to that location; software bug, hardware bug).

Name

wipefs - wipe a filesystem signature from a device

Synopsis

wipefs [-ahnp] [-o offset] device

Description

wipefs can erase filesystem or raid signatures (magic strings) from the device to make the filesystem invisible for libblkid. wipefs does not erase the whole filesystem or any other data from the device. When used without options -a or -o, it lists all visible filesystems and offsets of their signatures.

Options

Author

Karel Zak <kzak@redhat.com>.

Availability

The wipefs command is part of the util-linux-ng package and is available from ftp://ftp.kernel.org/pub/linux/utils/util-linux-ng/.

See Also

blkid(8) findfs(8)